doris manager: 24.x

doris: 3.0

部署模式: 存算一体

kafka表的数据格式:

{'pageBatchID': '', 'uniq_array': ['feature3:19.31,19.39,19.34,19.39,19.36,19.39,19.39,19.36,19.39,19.37,19.34,19.31,19.35,19.33,19.35,19.35,19.42,19.37,19.35,19.37,19.37,19.34,19.34,19.34',

'feature2:23.71,23.79,23.68,23.65,23.62,23.67,23.67,23.76,23.73,23.69,23.6,23.49,23.68,23.68,23.52,23.6,23.87,23.8,23.61,23.7,23.72,23.49,23.68,23.58',

'meanHf:1216900104915',

'mean:255675728',

'std:7667',

'customFeature:',

'meanLf:19047538128',

'temperature:-274',

'bandSpectrum:21.58,20.05,19.57,19.56,19.84,19.77,20.05,19.51,19.21,19.03,19.33,18.58,18.25,17.94,17.45,17.25,16.91,16.4,15.88,15.4',

'feature4:8.97,8.91,9.02,8.97,8.96,9.01,8.97,8.99,8.97,8.98,8.87,8.89,8.97,8.91,8.9,8.89,9.03,9.02,8.83,8.98,8.97,8.84,8.91,8.84',

'extend:{"SerialData":"","GpioData":-1}',

'feature1:27.75,27.81,27.77,27.81,27.82,27.86,27.85,27.82,27.82,27.84,27.84,27.81,27.83,27.81,27.86,27.84,27.83,27.84,27.82,27.84,27.87,27.86,27.82,27.83',

'peakFreqs:4.54,5.6,5.96,6.15,6.49,6.65,6.76,6.87,7.03,7.13,8.03,8.56,9.0,9.39',

'peakPowers:18.43,17.56,17.36,17.11,17.37,17.21,17.01,16.62,17.97,16.45,16.36,16.14,16.53,16.48'],

'time': 0, 'nodeId': 852, 'uuid': '704A0ED3C5CA', 'device_time': '2023-03-24T10:00:00'}

doris表格式:

CREATE TABLE iot_kafka (

`node_id` bigint NOT NULL COMMENT '点位 ID',

`device_time` DATETIME NOT NULL COMMENT '时间',

`device_timestamp` bigint NULL COMMENT '时间',

`uuid` varchar(30) NULL COMMENT 'uuid',

`iot_json` STRING NULL COMMENT 'iot_json',

`create_time` DATETIMEV2 NOT null default CURRENT_TIMESTAMP COMMENT '时间'

)

ENGINE=OLAP

DUPLICATE KEY(node_id,device_time,device_timestamp)

AUTO PARTITION BY RANGE (DATE_TRUNC(device_time, 'hour'))()

DISTRIBUTED BY HASH(`node_id`) BUCKETS 10

PROPERTIES (

"dynamic_partition.time_unit"="hour",

"dynamic_partition.start" = "-1",

"dynamic_partition.end" = "1",

"dynamic_partition.prefix" = "p",

"dynamic_partition.enable" = "true",

"dynamic_partition.replication_num" = "1",

"dynamic_partition.time_zone" = "Asia/Shanghai",

"compression" = "zstd"

);

CREATE ROUTINE LOAD iot_kafka_load_test_compare_v1 ON iot_kafka

COLUMNS(iot_json,node_id=json_extract(iot_json, '$.nodeId'),device_timestamp=json_extract(iot_json, '$.time'),uuid=json_extract(iot_json, '$.uuid'),device_time=from_unixtime(device_timestamp/1000))

FROM KAFKA(

"kafka_broker_list" = "xxx.xxx.xxx.xxx:30092",

"kafka_topic" = "ddps.ts_kv_iot_data_test_v1",

"property.group.id" = "doris_job",

"property.kafka_default_offsets" = "OFFSET_END"

);

show routine load TASK where jobname = 'iot_kafka_load_test_compare_v1'

RESUME ROUTINE LOAD for iot_kafka_load_test_compare_v1

select max(device_time) from iot_kafka group by node_id

CREATE TABLE iot_main (

`node_id` bigint NOT NULL COMMENT '点位 ID',

`device_time` DATETIME NOT NULL COMMENT '时间',

`key_id` varchar(64) not NULL COMMENT 'key',

`device_timestamp` bigint not NULL COMMENT '时间',

`uuid` varchar(32) NULL COMMENT 'uuid',

`key_v` STRING NULL COMMENT 'v',

`d_key_v` double null COMMENT 'double v',

`array_key_v` array<double> null COMMENT 'array v',

`create_time` DATETIMEV2 NOT null default CURRENT_TIMESTAMP COMMENT '时间'

)

ENGINE=OLAP

UNIQUE KEY(node_id,device_time,key_id,device_timestamp)

AUTO PARTITION BY RANGE (DATE_TRUNC(device_time, 'day'))()

DISTRIBUTED BY HASH(`node_id`) BUCKETS 10

PROPERTIES (

"replication_num" = "1",

"bloom_filter_columns"="node_id,key_id",

"compression" = "zlib"

-- "storage_vault_name" = "minio_storage_vault"

);

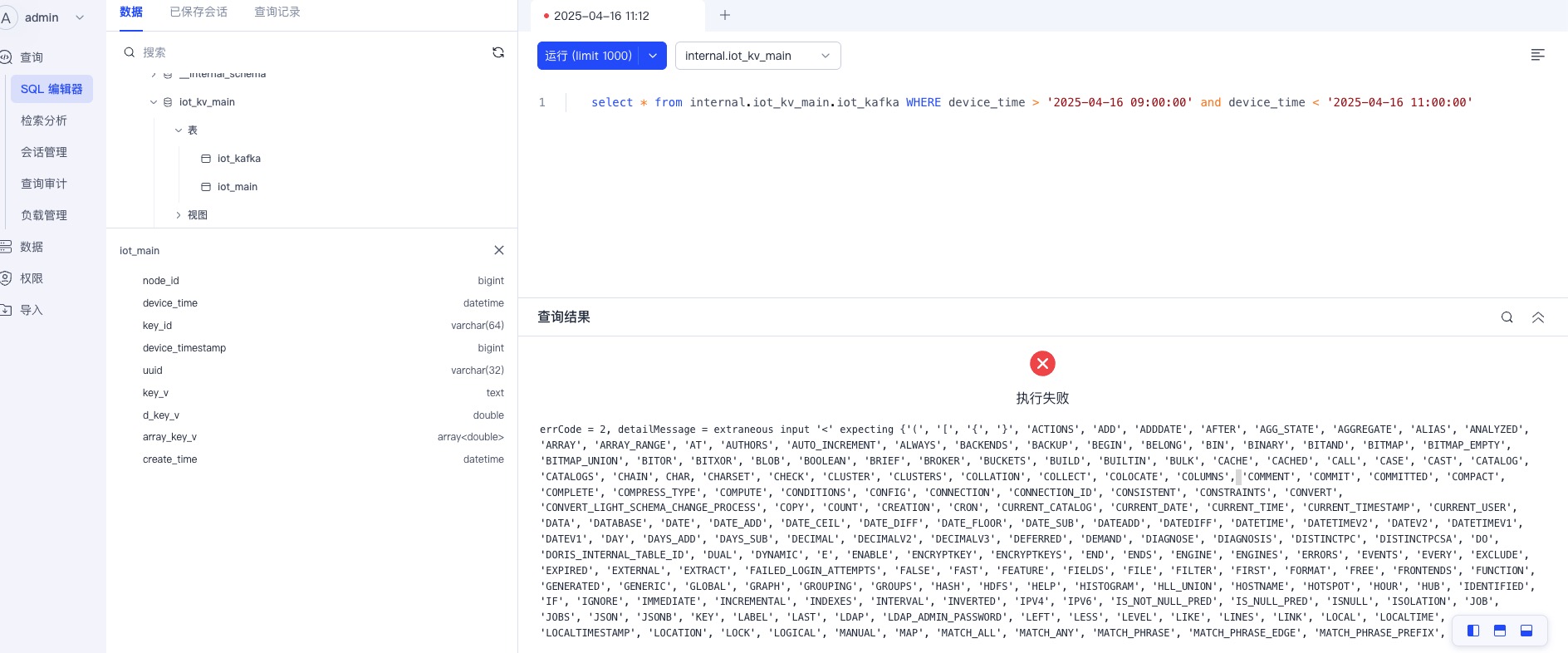

报错如下:

{

"data": {

"key": "b5f83739-0db2-43dc-9659-28cd8254b26f",

"sqlSessionId": "53ae75f9-74ff-4c2e-b358-a5284a6cbb26"

},

"code": 0,

"message": "errCode = 2, detailMessage = \nextraneous input '<' expecting {'(', '[', '{', '}', 'ACTIONS', 'ADD', 'ADDDATE', 'AFTER', 'AGG_STATE', 'AGGREGATE', 'ALIAS', 'ANALYZED', 'ARRAY', 'ARRAY_RANGE', 'AT', 'AUTHORS', 'AUTO_INCREMENT', 'ALWAYS', 'BACKENDS', 'BACKUP', 'BEGIN', 'BELONG', 'BIN', 'BINARY', 'BITAND', 'BITMAP', 'BITMAP_EMPTY', 'BITMAP_UNION', 'BITOR', 'BITXOR', 'BLOB', 'BOOLEAN', 'BRIEF', 'BROKER', 'BUCKETS', 'BUILD', 'BUILTIN', 'BULK', 'CACHE', 'CACHED', 'CALL', 'CASE', 'CAST', 'CATALOG', 'CATALOGS', 'CHAIN', CHAR, 'CHARSET', 'CHECK', 'CLUSTER', 'CLUSTERS', 'COLLATION', 'COLLECT', 'COLOCATE', 'COLUMNS', 'COMMENT', 'COMMIT', 'COMMITTED', 'COMPACT', 'COMPLETE', 'COMPRESS_TYPE', 'COMPUTE', 'CONDITIONS', 'CONFIG', 'CONNECTION', 'CONNECTION_ID', 'CONSISTENT', 'CONSTRAINTS', 'CONVERT', 'CONVERT_LIGHT_SCHEMA_CHANGE_PROCESS', 'COPY', 'COUNT', 'CREATION', 'CRON', 'CURRENT_CATALOG', 'CURRENT_DATE', 'CURRENT_TIME', 'CURRENT_TIMESTAMP', 'CURRENT_USER', 'DATA', 'DATABASE', 'DATE', 'DATE_ADD', 'DATE_CEIL', 'DATE_DIFF', 'DATE_FLOOR', 'DATE_SUB', 'DATEADD', 'DATEDIFF', 'DATETIME', 'DATETIMEV2', 'DATEV2', 'DATETIMEV1', 'DATEV1', 'DAY', 'DAYS_ADD', 'DAYS_SUB', 'DECIMAL', 'DECIMALV2', 'DECIMALV3', 'DEFERRED', 'DEMAND', 'DIAGNOSE', 'DIAGNOSIS', 'DISTINCTPC', 'DISTINCTPCSA', 'DO', 'DORIS_INTERNAL_TABLE_ID', 'DUAL', 'DYNAMIC', 'E', 'ENABLE', 'ENCRYPTKEY', 'ENCRYPTKEYS', 'END', 'ENDS', 'ENGINE', 'ENGINES', 'ERRORS', 'EVENTS', 'EVERY', 'EXCLUDE', 'EXPIRED', 'EXTERNAL', 'EXTRACT', 'FAILED_LOGIN_ATTEMPTS', 'FALSE', 'FAST', 'FEATURE', 'FIELDS', 'FILE', 'FILTER', 'FIRST', 'FORMAT', 'FREE', 'FRONTENDS', 'FUNCTION', 'GENERATED', 'GENERIC', 'GLOBAL', 'GRAPH', 'GROUPING', 'GROUPS', 'HASH', 'HDFS', 'HELP', 'HISTOGRAM', 'HLL_UNION', 'HOSTNAME', 'HOTSPOT', 'HOUR', 'HUB', 'IDENTIFIED', 'IF', 'IGNORE', 'IMMEDIATE', 'INCREMENTAL', 'INDEXES', 'INTERVAL', 'INVERTED', 'IPV4', 'IPV6', 'IS_NOT_NULL_PRED', 'IS_NULL_PRED', 'ISNULL', 'ISOLATION', 'JOB', 'JOBS', 'JSON', 'JSONB', 'KEY', 'LABEL', 'LAST', 'LDAP', 'LDAP_ADMIN_PASSWORD', 'LEFT', 'LESS', 'LEVEL', 'LIKE', 'LINES', 'LINK', 'LOCAL', 'LOCALTIME', 'LOCALTIMESTAMP', 'LOCATION', 'LOCK', 'LOGICAL', 'MANUAL', 'MAP', 'MATCH_ALL', 'MATCH_ANY', 'MATCH_PHRASE', 'MATCH_PHRASE_EDGE', 'MATCH_PHRASE_PREFIX', 'MATCH_REGEXP', 'MATERIALIZED', 'MAX', 'MEMO', 'MERGE', 'MIGRATE', 'MIGRATIONS', 'MIN', 'MINUTE', 'MODIFY', 'MONTH', 'MTMV', 'NAME', 'NAMES', 'NEGATIVE', 'NEVER', 'NEXT', 'NGRAM_BF', 'NO', 'NON_NULLABLE', 'NULL', 'NULLS', 'OF', 'OFFSET', 'ONLY', 'OPEN', 'OPTIMIZED', 'PARAMETER', 'PARSED', 'PARTITIONS', 'PASSWORD', 'PASSWORD_EXPIRE', 'PASSWORD_HISTORY', 'PASSWORD_LOCK_TIME', 'PASSWORD_REUSE', 'PATH', 'PAUSE', 'PERCENT', 'PERIOD', 'PERMISSIVE', 'PHYSICAL', 'PI', '?', 'PLAN', 'PRIVILEGES', 'PROCESS', 'PLUGIN', 'PLUGINS', 'POLICY', 'PROC', 'PROCESSLIST', 'PROFILE', 'PROPERTIES', 'PROPERTY', 'QUANTILE_STATE', 'QUANTILE_UNION', 'QUERY', 'QUOTA', 'RANDOM', 'RECENT', 'RECOVER', 'RECYCLE', 'REFRESH', 'REGEXP', 'REPEATABLE', 'REPLACE', 'REPLACE_IF_NOT_NULL', 'REPLAYER', 'REPOSITORIES', 'REPOSITORY', 'RESOURCE', 'RESOURCES', 'RESTORE', 'RESTRICTIVE', 'RESUME', 'RETURNS', 'REWRITTEN', 'RIGHT', 'RLIKE', 'ROLLBACK', 'ROLLUP', 'ROUTINE', 'S3', 'SAMPLE', 'SCHEDULE', 'SCHEDULER', 'SCHEMA', 'SECOND', 'SEQUENCE', 'SERIALIZABLE', 'SESSION', 'SESSION_USER', 'SET_SESSION_VARIABLE', 'SHAPE', 'SKEW', 'SNAPSHOT', 'SONAME', 'SPLIT', 'SQL', 'STAGE', 'STAGES', 'START', 'STARTS', 'STATS', 'STATUS', 'STOP', 'STORAGE', 'STREAM', 'STREAMING', 'STRING', 'STRUCT', 'SUBDATE', 'SUM', 'TABLES', 'TASK', 'TASKS', 'TEMPORARY', 'TEXT', 'THAN', 'TIME', 'TIMESTAMP', 'TIMESTAMPADD', 'TIMESTAMPDIFF', 'TRANSACTION', 'TREE', 'TRIGGERS', 'TRIM', 'TRUE', 'TRUNCATE', 'TYPE', 'TYPES', 'UNCOMMITTED', 'UNLOCK', 'UNSET', 'UP', 'USER', 'VALUE', 'VARCHAR', 'VARIABLE', 'VARIABLES', 'VARIANT', 'VAULT', 'VAULTS', 'VERBOSE', 'VERSION', 'VIEW', 'VIEWS', 'WARM', 'WARNINGS', 'WEEK', 'WORK', 'YEAR', '+', '-', '*', '~', '/*+', '*/', '/*', '@', '@@', STRING_LITERAL, INTEGER_VALUE, EXPONENT_VALUE, DECIMAL_VALUE, IDENTIFIER, BACKQUOTED_IDENTIFIER}(line 1, pos 105)\n"

}

麻烦社区的同学看看,谢谢