1.基本信息

doris版本:2.1.9,2.1.10

2.报错信息

现象:数据写入失败

{

"TxnId": 62318,

"Label": "_saas_vehicle_iot_1045_realtime_dwd_security_event_data_0_157_a7730012-e9b7-4f12-817a-d3f98df8569e",

"Comment": "",

"TwoPhaseCommit": "false",

"Status": "Fail",

"Message": "[ANALYSIS_ERROR]TStatus: errCode = 2, detailMessage = column has no source field, column=mva_SUM__CASE WHEN 1 IS NULL THEN 0 ELSE 1 END",

"NumberTotalRows": 0,

"NumberLoadedRows": 0,

"NumberFilteredRows": 0,

"NumberUnselectedRows": 0,

"LoadBytes": 0,

"LoadTimeMs": 0,

"BeginTxnTimeMs": 0,

"StreamLoadPutTimeMs": 2,

"ReadDataTimeMs": 0,

"WriteDataTimeMs": 0,

"ReceiveDataTimeMs": 0,

"CommitAndPublishTimeMs": 0

}

3.复现过程

- 创建表

CREATE TABLE `realtime_dwd_security_event_data` (

`process_time` datetime NOT NULL COMMENT "入库时间",

`happen_time` datetime NOT NULL COMMENT "happen time",

`vin` varchar(128) NULL COMMENT "车辆唯一标识",

`kafka_partition` int NOT NULL COMMENT "kafka topic partitions",

`event_level` int NOT NULL DEFAULT "0" COMMENT "事件级别",

`part_type` int NULL COMMENT "风险类型",

`risk_type` int NULL COMMENT "风险类型",

`risk_subtype` int NULL COMMENT "风险子类型",

`kafka_topic` varchar(256) NULL COMMENT "kafka topic",

`kafka_offset` bigint NULL COMMENT "kafka topic partitions offset",

`kafka_timestamp` bigint NULL COMMENT "kafka timestamp",

`vehicle_brand_name` varchar(128) NULL COMMENT "车品牌",

`vehicle_series_name` varchar(128) NULL COMMENT "车系",

`vehicle_model_name` varchar(128) NULL COMMENT "车型",

`vehicle_brand_id` bigint NULL COMMENT "车品牌id",

`vehicle_series_id` bigint NULL COMMENT "车系id",

`vehicle_model_id` bigint NULL COMMENT "车型id",

`metri_tag_pk_id` bigint NOT NULL COMMENT "唯一id",

`event_id` varchar(64) NULL COMMENT "事件id,唯一值",

`process_time_ms` bigint NULL COMMENT "入库时间戳",

`source_ip` varchar(128) NULL COMMENT "source_ip",

`target_ip` varchar(128) NULL COMMENT "target_ip",

`event_name` varchar(128) NULL COMMENT "事件名称",

`message` text NULL COMMENT "事件详情",

`model_id` bigint NULL COMMENT "碰撞模型id",

`tenant_id` bigint NULL COMMENT "租户id",

`device_receive_time` bigint NULL COMMENT "设备服务接收时间",

`split_id` bigint NULL COMMENT "拆分规则id",

`rule_ids` text NULL COMMENT "规则模型碰撞id",

`other_field` text NULL COMMENT "数据表转化字段",

`ids_type` varchar(128) NULL COMMENT "IDS节点厂商",

`ids_name` varchar(128) NULL COMMENT "IDS节点名称",

`ids_version` varchar(128) NULL COMMENT "IDS节点版本",

`part_id` varchar(128) NULL COMMENT "part_id",

`ota_version` varchar(128) NULL COMMENT "ota版本",

`software_version` varchar(128) NULL COMMENT "软件版本",

`hardware_version` varchar(128) NULL COMMENT "硬件版本",

`match_number` int NULL DEFAULT "0" COMMENT "碰撞次数",

`match_resource_id` text NULL COMMENT "统计模型碰撞源id",

`window_time` text NULL COMMENT "窗口时间",

`match_type` int NULL COMMENT "1-解析规则,2-规则模型,3-统计模型,4-关联模型",

`xq_text` text NULL,

`xq_int` int NULL,

`xq_varhcar` varchar(32) NULL,

`xqq_string` text NULL,

`xqq_intt` int NULL,

`xqq_decimal` decimal(38,10) NULL,

`xq1_varhcar` varchar(32) NULL,

`xq_datetime` datetime NULL,

`xq_date` date NULL,

`xq_decimal` decimal(38,10) NULL,

`xq_bigint` bigint NULL,

`xq_intt` int NULL,

`xq_smallint` smallint NULL,

`xq_tinyint` tinyint NULL,

`xqtestzuhu` decimal(38,10) NULL,

`xqtttt` decimal(5,4) NULL,

`xqtinyy` tinyint NULL,

`xqtest` tinyint NULL,

`xqtestt` text NULL,

INDEX idx_vehicle_brand (`vehicle_brand_name`) USING INVERTED PROPERTIES("parser" = "unicode", "lower_case" = "true", "support_phrase" = "true"),

INDEX idx_vehicle_series (`vehicle_series_name`) USING INVERTED PROPERTIES("parser" = "unicode", "lower_case" = "true", "support_phrase" = "true"),

INDEX idx_vehicle_model (`vehicle_model_name`) USING INVERTED PROPERTIES("parser" = "unicode", "lower_case" = "true", "support_phrase" = "true")

) ENGINE=OLAP

DUPLICATE KEY(`process_time`, `happen_time`, `vin`, `kafka_partition`)

PARTITION BY RANGE(`process_time`)

(PARTITION p20250530 VALUES [('2025-05-30 00:00:00'), ('2025-05-31 00:00:00')),

PARTITION p20250531 VALUES [('2025-05-31 00:00:00'), ('2025-06-01 00:00:00')),

PARTITION p20250601 VALUES [('2025-06-01 00:00:00'), ('2025-06-02 00:00:00')),

PARTITION p20250602 VALUES [('2025-06-02 00:00:00'), ('2025-06-03 00:00:00')),

PARTITION p20250603 VALUES [('2025-06-03 00:00:00'), ('2025-06-04 00:00:00')),

PARTITION p20250604 VALUES [('2025-06-04 00:00:00'), ('2025-06-05 00:00:00')),

PARTITION p20250605 VALUES [('2025-06-05 00:00:00'), ('2025-06-06 00:00:00')),

PARTITION p20250606 VALUES [('2025-06-06 00:00:00'), ('2025-06-07 00:00:00')),

PARTITION p20250607 VALUES [('2025-06-07 00:00:00'), ('2025-06-08 00:00:00')),

PARTITION p20250608 VALUES [('2025-06-08 00:00:00'), ('2025-06-09 00:00:00')),

PARTITION p20250609 VALUES [('2025-06-09 00:00:00'), ('2025-06-10 00:00:00')),

PARTITION p20250610 VALUES [('2025-06-10 00:00:00'), ('2025-06-11 00:00:00')),

PARTITION p20250611 VALUES [('2025-06-11 00:00:00'), ('2025-06-12 00:00:00')),

PARTITION p20250612 VALUES [('2025-06-12 00:00:00'), ('2025-06-13 00:00:00')),

PARTITION p20250613 VALUES [('2025-06-13 00:00:00'), ('2025-06-14 00:00:00')),

PARTITION p20250614 VALUES [('2025-06-14 00:00:00'), ('2025-06-15 00:00:00')),

PARTITION p20250615 VALUES [('2025-06-15 00:00:00'), ('2025-06-16 00:00:00')),

PARTITION p20250616 VALUES [('2025-06-16 00:00:00'), ('2025-06-17 00:00:00')),

PARTITION p20250617 VALUES [('2025-06-17 00:00:00'), ('2025-06-18 00:00:00')),

PARTITION p20250618 VALUES [('2025-06-18 00:00:00'), ('2025-06-19 00:00:00')))

DISTRIBUTED BY HASH(`kafka_partition`) BUCKETS AUTO

PROPERTIES (

"replication_allocation" = "tag.location.default: 1",

"min_load_replica_num" = "-1",

"is_being_synced" = "false",

"dynamic_partition.enable" = "true",

"dynamic_partition.time_unit" = "day",

"dynamic_partition.time_zone" = "Asia/Shanghai",

"dynamic_partition.start" = "-2147483648",

"dynamic_partition.end" = "7",

"dynamic_partition.prefix" = "p",

"dynamic_partition.replication_allocation" = "tag.location.default: 1",

"dynamic_partition.buckets" = "10",

"dynamic_partition.create_history_partition" = "false",

"dynamic_partition.history_partition_num" = "-1",

"dynamic_partition.hot_partition_num" = "0",

"dynamic_partition.reserved_history_periods" = "NULL",

"dynamic_partition.storage_policy" = "",

"storage_medium" = "hdd",

"storage_format" = "V2",

"inverted_index_storage_format" = "V1",

"light_schema_change" = "true",

"disable_auto_compaction" = "false",

"enable_single_replica_compaction" = "false",

"group_commit_interval_ms" = "10000",

"group_commit_data_bytes" = "134217728"

);

- 创建物化视图

CREATE materialized VIEW indicator_30f66e7df2ed4cc89a257323509527c3 AS SELECT

DATE_FORMAT( date_add( happen_time, INTERVAL 1 HOUR ), '%Y-%m-%d %H:00:00' ) AS happen_time,

vin,

count( 1 ) AS vsocStatisticValue

FROM

realtime_dwd_security_event_data

WHERE

process_time > '2025-06-12 00:00:00'

AND event_name IS NOT NULL

GROUP BY

DATE_FORMAT( date_add( happen_time, INTERVAL 1 HOUR ), '%Y-%m-%d %H:00:00' ),

vin;

- 备份表及从快照中恢复

1. 创建备份仓库

CREATE REPOSITORY `test_repo`

WITH HDFS

ON LOCATION "hdfs://bigdata01:8020/doris-repo/"

PROPERTIES

(

"fs.defaultFS"="hdfs://bigdata01:8020",

"hadoop.username" = "hdfs"

);

2. 生成快照

BACKUP SNAPSHOT saas_vehicle_iot_1045.snapshot_realtime_dwd_security_event_data

TO test_repo

ON (realtime_dwd_security_event_data)

PROPERTIES ("type" = "full");

3. 从快照恢复

RESTORE SNAPSHOT test.snapshot_realtime_dwd_security_event_data

FROM `test_repo`

ON ( `realtime_dwd_security_event_data` )

PROPERTIES

(

"backup_timestamp"="2025-06-11-17-21-20",

"replication_num" = "1",

"reserve_dynamic_partition_enable" = "true"

);

- 使用flink streamload写入

写入失败,报错信息如下

[ANALYSIS_ERROR]TStatus: errCode = 2, detailMessage = column has no source field, column=mva_SUM__CASE WHEN 1 IS NULL THEN 0 ELSE 1 END

4. 分析

-

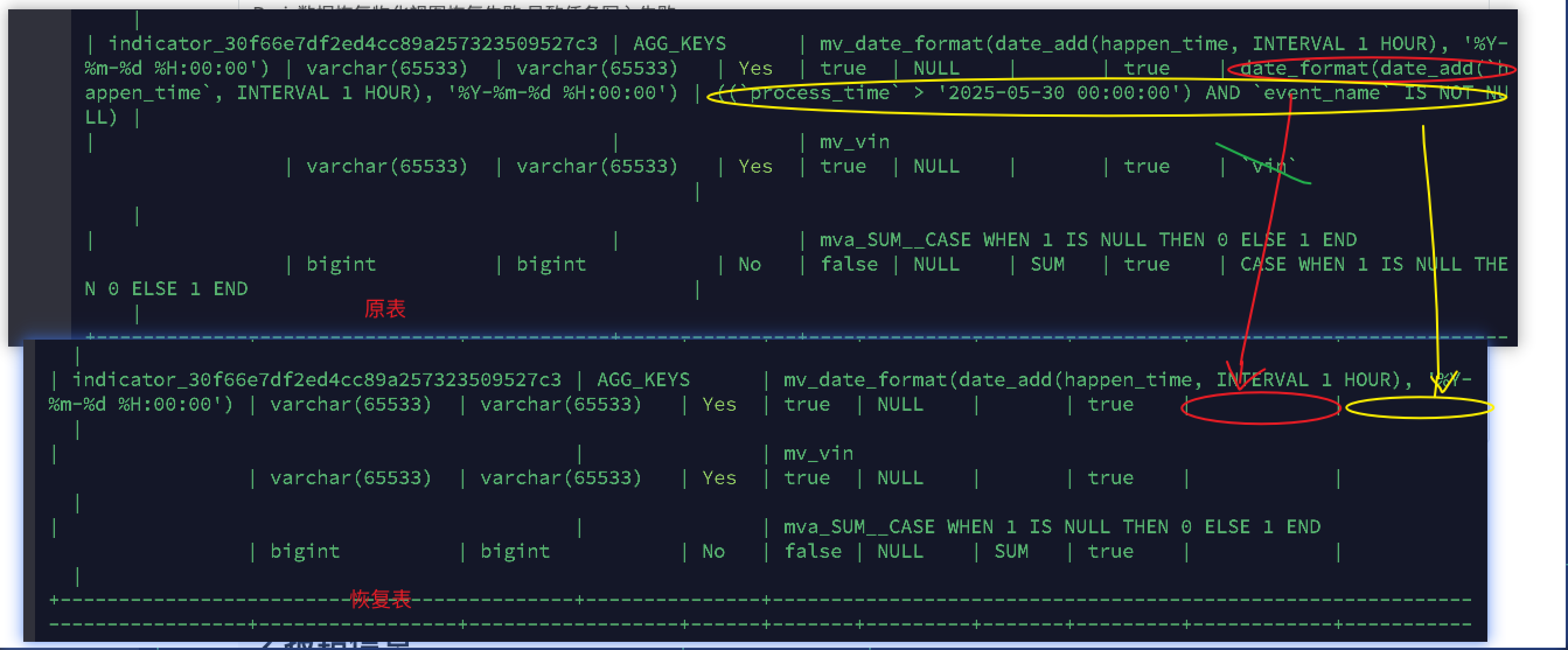

发现原备份表与恢复表物化视图元数据信息不一致

-

下图为原表与恢复表物化视图元数据

-

删除物化视图重建物化视图,则写入成功