1 Answer

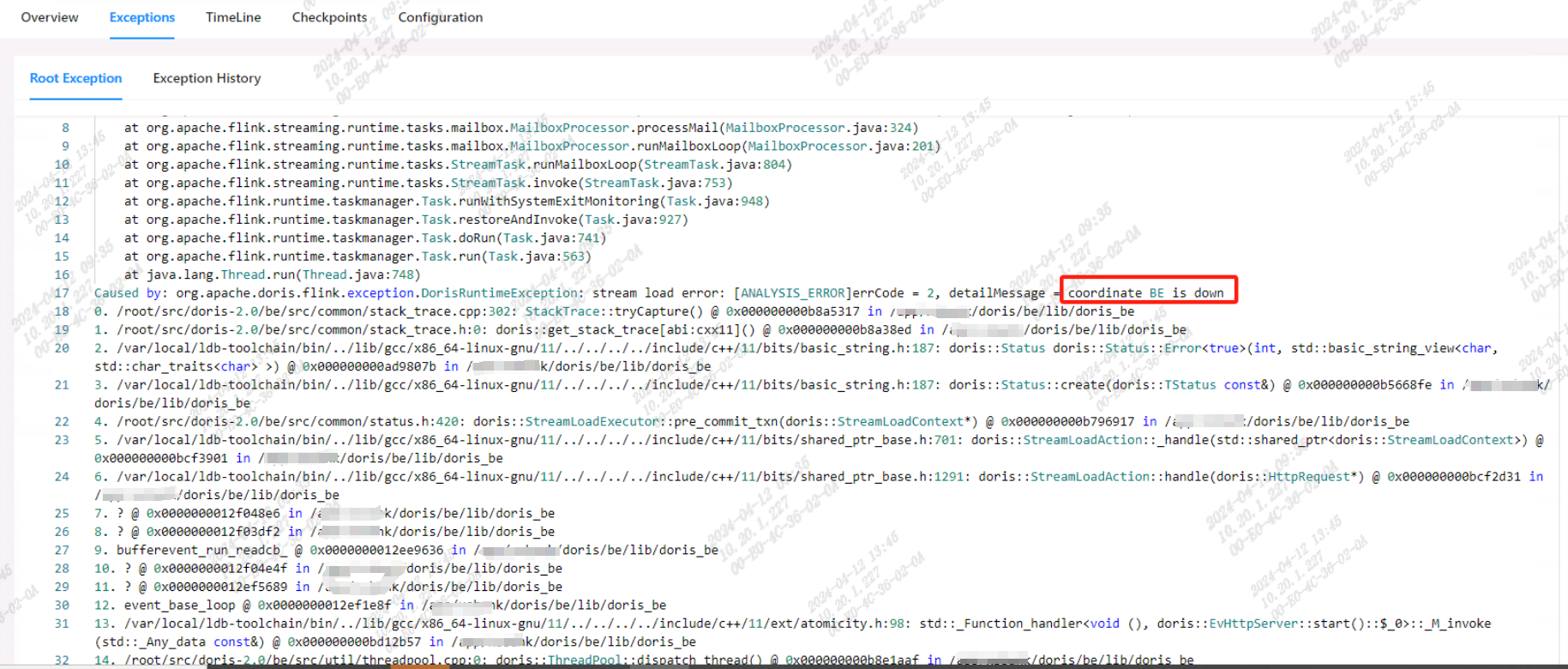

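The default heartbeat timeout is 5 s. As soon as heartbeats stop, the FE immediately aborts the transactions coordinated by that BE, even though the BE is not actually down.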

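However, a BE transaction does not need the FE's participation while a load is in progress, so a 5 s threshold is too sensitive. I suggest aborting the coordinator BE's transactions only after more than 1 minute without a heartbeat.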
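To illustrate the suggestion, here is a minimal sketch that separates "heartbeat missed" from "abort the coordinator BE's transactions". It is not the FE's actual code: `BackendState`, `is_alive`, `should_abort_txns`, and both constant names are hypothetical, and only the 5 s and 1 minute values come from the post above.

```python
from dataclasses import dataclass

# Values taken from the post; the names are hypothetical, not real FE config keys.
HEARTBEAT_TIMEOUT_S = 5.0     # current default: BE is considered unhealthy after 5 s
ABORT_GRACE_PERIOD_S = 60.0   # proposed: abort transactions only after 1 minute of silence


@dataclass
class BackendState:
    """Hypothetical per-BE bookkeeping kept by the FE."""
    last_heartbeat: float  # timestamp (seconds) of the last successful heartbeat


def is_alive(be: BackendState, now: float) -> bool:
    """Liveness check: still uses the short heartbeat timeout."""
    return now - be.last_heartbeat <= HEARTBEAT_TIMEOUT_S


def should_abort_txns(be: BackendState, now: float) -> bool:
    """Abort decision: uses the longer grace period instead of the heartbeat timeout."""
    return now - be.last_heartbeat > ABORT_GRACE_PERIOD_S
```

With this split, a BE that misses heartbeats for 10 s is reported as not alive, but its in-flight load transactions survive unless the silence lasts longer than 60 s.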
