Version: 3.0.6

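Writing to a single-replica table through Logstash used to work fine. I dropped that table and recreated it with two replicas (the schema is identical to the previous one), and since then the load keeps failing with this error and no data gets imported. Is this related to the replica count?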

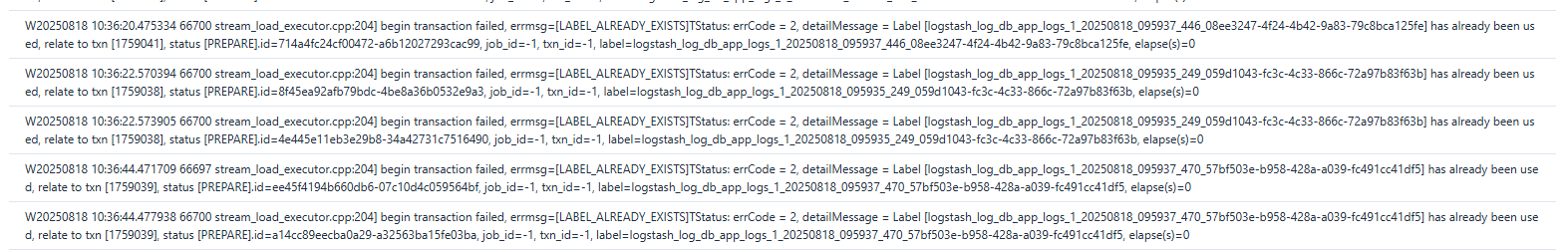

报错如下:

Table schema

    CREATE TABLE `app_logs_1` (
        `log_time` datetime(3),
        `node_ip` varchar(20),
        `service_name` varchar(256),
        `log_level` varchar(10),
        `log_content` text,
        `stack_trace` text,
        `host_name` varchar(50),
        `class_method` varchar(512),
        `thread_name` varchar(512),
        `tx_id` varchar(80),
        `span_id` varchar(80),
        `log_file_path` varchar(500),
        `file_offset` bigint,
        `agent_version` varchar(20),
        `agent_id` varchar(60),
        `ecs_version` varchar(10),
        `raw_data` text,
        `doris_ingest_time` datetime,
        INDEX log_content_idx (`log_content`) USING INVERTED PROPERTIES("parser" = "unicode"),
        INDEX stack_trace_idx (`stack_trace`) USING INVERTED,
        INDEX class_method_idx (`class_method`) USING INVERTED
    )
    DUPLICATE KEY(`log_time`)
    PARTITION BY RANGE(`log_time`)()
    DISTRIBUTED BY RANDOM BUCKETS 96
    PROPERTIES (
        "replication_num" = 2,
        "compression"="zstd",
        "compaction_policy"="time_series",
        "dynamic_partition.enable" = "true",
        "dynamic_partition.time_unit" = "DAY",
        "dynamic_partition.start" = "-31",
        "dynamic_partition.end" = "3",
        "dynamic_partition.prefix" = "p",
        "dynamic_partition.buckets" = "96",
        "dynamic_partition.create_history_partition" = "true",
        "dynamic_partition.history_partition_num" = "14"
    );

FE configuration

    enable_single_replica_load=true
    enable_round_robin_create_tablet=true
    tablet_rebalancer_type=partition
    max_running_txn_num_per_db=10000
    streaming_label_keep_max_second=300
    label_clean_interval_second=300
    max_backend_heartbeat_failure_tolerance_count=10

BE configuration

    write_buffer_size=1073741824
    max_tablet_version_num=200000
    max_cumu_compaction_threads=6
    inverted_index_compaction_enable=true
    enable_segcompaction=false
    enable_ordered_data_compaction=false
    enable_write_index_searcher_cache=false
    disable_storage_page_cache=true
    enable_single_replica_load=true
    streaming_load_json_max_mb=250
    trash_file_expire_time_sec=300
    path_gc_check_interval_second=900
    path_scan_interval_second=900

Logstash configuration

    output {
      if "_grokparsefailure" in [tags] {
        file {
          path => "/dev/null"
        }
      } else {
        doris {
          http_hosts => ["http://xxx:8030", "http://xxx:8030", "http://xxx:8030"]
          user => "root"
          password => "xxx"
          db => "log_db"
          table => "app_logs_1"
          headers => {
            "format" => "json"
            "read_json_by_line" => "true"
            "load_to_single_tablet" => "true"
            "max_filter_ratio" => 1
          }
          mapping => {
            "log_time"=>"%{@timestamp}"
            "node_ip"=>"%{NODE_IP}"
            "service_name"=>"%{target_topic}"
            "log_level"=>"%{[Log_Type]}"
            "log_content"=>"%{[Log_info]}"
            "host_name"=>"%{[host][name]}"
            "class_method"=>"%{[Class]}"
            "thread_name"=>"%{[Thread]}"
            "log_file_path"=>"%{[log][file][path]}"
            "file_offset"=>"%{[log][offset]}"
            "agent_version"=>"%{[agent][version]}"
            "agent_id"=>"%{[agent][id]}"
            "ecs_version"=>"%{[ecs][version]}"
          }
          log_request => true
        }
      }
    }